Podcast: Is Liquid Cooling Inevitable?

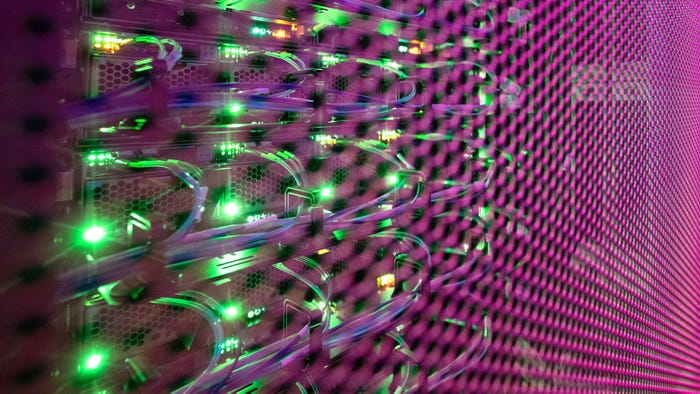

Modern server hardware is getting hotter – at some point, liquid cooling will present the only option for keeping up.

Find the episode and subscribe to Uptime with DCK on Apple Podcasts and Spotify.

In the latest episode of Uptime with DCK, we take a detailed look at liquid cooling with Dattatri Mattur, director of hardware engineering at Cisco.

With upcoming mainstream server CPUs expected to consume around 400W of power, GPUs already consuming more than that (Nvidia’s H100 requires ~700W per board), and with terabytes of memory per server, the cutting-edge IT workloads of the future will be almost impossible to cool with air alone.

Liquid cooling to the rescue

In this podcast, we discuss thermal design points of modern processors, the cooling requirements of edge computing, and the impact of new workloads on power consumption.

We also look at some of the barriers facing the wider deployment of liquid cooling, and the enduring importance of server fans.

“This is going to take some time,” Mattur told Data Center Knowledge. “This is not an overnight, forklift change to the entire data center.”

See the full transcript below:

Max Smolaks: Hello and welcome to Uptime with Data Center Knowledge, the podcast that brings you the news and views from the global data center industry. I'm Max Smolaks, senior editor at Data Center Knowledge, and in this episode we will discuss liquid cooling in the data center, where it is today, and where it is going. To look at the subject in detail, I'm pleased to welcome Dattatri Mattur, director of hardware engineering at Cisco. Hello, Dattatri, and welcome to the show.

Dattatri Mattur: Thank you, Max, for hosting this event. And I'm excited to talk about what we are doing for liquid cooling at Cisco.

MS: Absolutely. And first, can you tell us why you're investigating liquid cooling? After all, Cisco does not make cooling equipment, at least not to my knowledge. So why are you interested?

DM: Absolutely, I can tell you why we are interested. I know many people mistake Cisco for Sysco, the produce company. Similar to that, people are wondering why Cisco is into liquid cooling here. What is happening in the last few years, or maybe in the last five years, you see the power consumed by the various components in the system has gone up significantly.

Just as an example, the M3 generation of servers that we had in the market had a CPU TDP of something like 140 watts. In the next generation, M7, which we will be shipping later this year, we'll have CPUs consuming north of 350 watts. So it's almost 3x. And within a few years, it will exceed 400 watts. So it is going to become impossible to cool these CPUs with air cooling alone; we need to find a different mechanism, hence liquid cooling is getting more traction.

MS: And engineers have been trying to introduce liquid cooling into the traditional data center for at least a decade, probably longer if we're talking about HPC systems. So how has the conversation around the subject changed? You know, like, what are the drivers? If we want to go to the basics - why are chips becoming so powerful?

DM: There are many drivers, and I'll classify them into three key aspects. One is component power, and I'll get into some detail. The second one is sustainability: regulatory requirements are kicking in, and you have been hearing about net zero by 2040 or 2045 from various countries. And the third one that is going to drive liquid cooling is edge growth, as people start deploying compute and network infrastructure at the edge. These are very small, enclosed spaces that they need to be able to cool, and that's one of the reasons.

Now, going back to component power. CPU TDPs have gone up significantly, as I stated earlier; you're going from 100 watts to 400 watts by 2025. Memory power is another thing that is exploding. At Cisco, we already have 64GB and 128GB as our highest-selling memory modules. A typical server now goes out with an average of 800GB to a terabyte of memory. So, memory power is going up. And the third thing which is driving [server power consumption] is the GPU, the emergence of AI and ML, and that drives the requirement to cool the GPUs, which are in the vicinity of 300 to 400 watts.

Of course, on sustainability, countries are mandating new power usage effectiveness [PUE] limits, saying that a data center cannot exceed 1.3, and some countries are getting even more aggressive, at 1.2 or 1.25. How they can meet these requirements and become net-zero compliant is one of the key drivers making this move to liquid cooling mandatory as we get towards the later part of this decade.
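Editor's note: PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a cap of 1.3 leaves at most 0.3 units of overhead (cooling, power distribution, lighting) per unit of IT load. The sketch below illustrates that arithmetic for the caps mentioned above. The 1,000 kW IT load and the function names are hypothetical, and instantaneous power stands in for what is normally an annualized energy figure.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def max_overhead_kw(it_equipment_kw: float, pue_cap: float) -> float:
    """Largest non-IT load (cooling, power distribution, etc.) allowed under a PUE cap."""
    return it_equipment_kw * (pue_cap - 1.0)


# Hypothetical 1,000 kW IT load, checked against the caps quoted in the interview.
IT_LOAD_KW = 1000.0
for cap in (1.3, 1.25, 1.2):
    overhead = max_overhead_kw(IT_LOAD_KW, cap)
    print(f"PUE cap {cap}: up to {overhead:.0f} kW of non-IT overhead")
```

Under these assumptions, tightening the cap from 1.3 to 1.2 removes a third of the overhead budget, which is why cooling efficiency becomes the main lever.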

MS: Okay, thank you. So, we talked a little bit about the drivers of why the customers are interested, but what happens when you introduce these systems into the data center? What are some benefits, maybe some drawbacks of liquid cooling systems in terms of data center operation? Because, to implement liquid cooling, you essentially need to re-architect your data hall, you need to make considerable adjustments. So, why are people doing that?

DM: Of course, we talked about the drivers, why people are looking at adopting liquid cooling, but let me tell you the disadvantages. Other than hyperscalers, a lot of enterprise and commercial users have data centers which were built out over the last 10-15 years. They cannot go ahead and modify these to adapt to new liquid cooling standards or methodologies. And that's the biggest Achilles' heel, as I would call our problem. Having said that, they're also seeing the necessity, why they have to adapt, both from a sustainability standpoint and from a performance and TCO standpoint. Workload requirements and the various aspects of scale-out technology are compelling them to adopt these newer GPUs, newer CPUs, and more memory, and they are left with no choice.

So, this is where they are coming in and asking vendors like us: how can you help us evolve into liquid cooling? Because this is going to take some time, this is not an overnight forklift change for the entire data center, it will progress over the years. In most of the existing data centers, they will retrofit this. In some of the greenfield deployments, they might start from scratch, where they have all the flexibility to redo the data center. But our focus is: how do we enable our existing customers to evolve and adapt to liquid cooling?

MS: Yeah, and the data center used to be sort of the domain of the electrical engineer, but soon it's going to become the domain of the plumbing engineer, with the pipes and the gaskets and all of that. Do you think this technology has suffered from its heritage? Because you have pointed out that it originated with PC enthusiasts and gaming hardware. And I think right now is a good time to ask: is your PC water-cooled?

DM: Yes. So my son plays games; I bought him a gaming desktop a few years back, and now he has outgrown that. This summer, I'm promising to get him a new one, which is going to be water-cooled. We are already looking at what the new things are, and what he should be adopting. You're absolutely right.

Having said that, water cooling comes with its own headaches. None of our customers want to hear about water cooling and leaks in the racks, where one leak can bring down the entire rack or several racks. That is the biggest drawback of this technology, and why people are very hesitant to adopt it. Having said that, like any other technology, it has evolved, and we can discuss more about the different types of cooling, how we are addressing some of the challenges associated with leaks and whatnot, and what we are doing to make it very reliable.

MS: Absolutely. It's mission-critical infrastructure, and downtime is pretty much the worst thing that can happen. So anything that causes downtime is the enemy. You've mentioned this, but there are several approaches to liquid cooling: there is immersion cooling, where it's just large vats of dielectric fluid, and direct-to-chip cooling, which involves a lot more pipes. So, you've looked into this in detail, and among all of these variants, which one do you think has the most legs in the data center in the near term?

DM: Sure, but before I provide Cisco's view or my personal view, I want to walk you through, at a high level, the different technologies that are getting traction. If you look at the liquid cooling technology that is being adopted for the data center, both in compute and in some of the high-end networking gear, we can classify it into two major types. One is immersion-based cooling, the other is cold plate-based cooling. Immersion-based cooling, again, you can sub-classify into two or three types: single-phase immersion or two-phase immersion. Both have advantages, disadvantages, efficiency, and then what is called the global warming parameter: how [environmentally] friendly it is. Similarly, when you go to cold plate cooling, we have both single-phase and two-phase. Both have advantages and disadvantages.

The advantage with immersion is it gives you flexibility because everything is [built from the] ground up, including your data center design, the way hardware is designed, you're not adapting to something legacy and trying to make it work. As a result, you get a better PUE factor, power usage effectiveness. Whereas some of the cold plate, the way it's been deployed, is more of an evolutionary technology to retrofit in the existing data center. So, we have heard several customers asking for immersion. And we have done some deployment, but not a lot. That's not exactly in the compute, but in the networking space, that has been tried out at Cisco.

But what we are right now going after, we know immersion eventually gets there. But for us to evolve this into liquid cooling, we are focusing on cold plate liquid cooling. The reason is very simple: our customer base wants to adapt this to the existing data center infrastructure, they're not going to change everything overnight.

The way we are working on this is: even in cold plate cooling, we have classified that into closed loop cooling and open loop cooling. At a high level, closed loop cooling means you have an existing rack, and everything in that 1U of your server, the entire liquid cooling, is built in and sealed. Even with leaks or anything, it should be self-contained to that particular rack, it should not spill over to other racks. That way, it's like deploying any other rack server or blade server that they have today. So they're not changing the foundational infrastructure, they're retaining assets.

That has its own drawbacks. As you can see, we have to work within the current envelope of physics in terms of real estate, what we can do with respect to the liquid, and how we build the, I would say, radiator, condenser, and pumping system. It all has to be miniaturized, it all has to be field-replaceable. And that's one technology we are going after, so that it gives them a path to move forward.

The second technology we are going after is called open loop cooling, where instead of building this into the same 1U server, for your 42U rack, we provide a unit called a Cooling Distribution Unit, or CDU as we call it, that is part of the entire rack; you put that in, and the rack is also managed by Cisco eventually, through your management system. From there you have the fittings, the hot pipe and cold pipe, which are plug-and-play kind of fittings, and you can connect them to your rack servers, so that you have cold liquid going in and hot liquid coming out of your server, which gets recycled and goes back into the server to cool it again. These are the two technologies we are going after right now.

MS: And again, we mentioned that these systems really grew out of gaming, and that doesn't really inspire enterprise levels of confidence. Do you think that the situation has changed, that people understand the systems better? Data center operators, data center users, insurers - because somebody needs to insure that facility? Do you think at all levels of the stack, we now understand this a little bit better? We trust it a little bit more? And this is why, slowly but surely, this technology is finally getting embraced after 10 years of, you know, like...

DM: You're absolutely right. This is now being deployed - I have some data here, let me see if I can quickly provide it. There is market research data from BIS based on both Intel and AMD's deployment. Currently it's about $1.43 billion of liquid cooling technologies being deployed in the data center already, and they are expecting a CAGR of about 25 to 30% between 2021 and 2026. Why is this happening? One, the performance requirements, the workload requirements are driving higher core counts, higher memory densities, GPUs, and for some of these workloads, there is no other easy solution, you have to adopt liquid cooling. As a result, as I stated previously, there are several technologies being brought out. And especially, the reliability and availability of the solution has improved significantly. I mean, if you look at the new [cooling equipment] that is coming out to help address the leaks and the performance, even if it leaks, it should not damage, or it will not damage, the other servers or other infrastructure in the racks; it will evaporate. That's the kind of technology they are looking at. So as a result, there is no collateral damage, that's one thing that's being ensured.
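Editor's note: to give a feel for what a 25 to 30% CAGR implies, the sketch below compounds the quoted $1.43 billion figure forward. It assumes, which the interview does not state, that $1.43 billion is the 2021 base value and that growth compounds annually over the five years to 2026; the function and constant names are illustrative only.

```python
def project_market_size(base_value: float, annual_rate: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate (CAGR)."""
    return base_value * (1.0 + annual_rate) ** years


BASE_BILLION_USD = 1.43   # figure quoted in the interview
YEARS = 5                 # assumption: 2021 base year, compounded through 2026

for rate in (0.25, 0.30):
    size = project_market_size(BASE_BILLION_USD, rate, YEARS)
    print(f"CAGR {rate:.0%}: roughly ${size:.2f} billion after {YEARS} years")
```

If the base year or the compounding window differs from these assumptions, the projected figures shift accordingly.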

The second thing is how the piping, the condenser, the radiators, and the pumps are being designed. There are parallel mechanisms built in: even if one fails, the other takes over, and even if both of them fail, some cooling kicks in, and as a result, your system is not dead.

The third point I would like to make is that when we say liquid cooling in this evolution of either closed loop or open loop with cold plate, we are not making it 100% liquid cooling. It is a hybrid technology where you still have the fans spinning, in conjunction with the liquid cooling. It's more of an assist, I would say. The fan is the assist; liquid cooling takes over the majority. But as you can imagine, if there is one or the other failure, there is some kind of mechanism put in so that you can limp along, and provide a service window to your service provider or data center operator to go address that. Some of these things give data center operators better confidence to start adopting these technologies.

MS: That sounds very positive. And yes, you're absolutely right. It feels like there's more recognition for this tech. And another aspect that is obviously very influential right now is a move towards more sustainability in the industry, right? You've mentioned some of these targets. These targets are very ambitious. Some of these are 2030 targets, not 2040 targets, and obviously it is a very quick timeline. So my last question is, do you think this drive towards more sustainable practices will benefit liquid cooling adoption, that people who have perhaps been putting it off for other reasons are going to be like: Is it going to help make us more sustainable? Is it going to cut down our electricity bills? Is it going to look good on the Corporate Social Responsibility report?

DM: Certainly, I mean, I'll give you a very simple answer to this. If you look at a 2U rack server, we pack at least 18 to 20 of these in a 42U rack. With the current CPUs, and memory, and GPUs, 10 to 15% of this one server [power] is consumed by fans today. So that can be anywhere in the vicinity of 175 to 250 watts. Now, if you have 20 of those servers, you're talking about something in the vicinity of 4,000 watts, four kilowatts. What can we do to reduce that power?
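Editor's note: the rack-level estimate above is simple multiplication, and the sketch below reproduces it using only the figures quoted in the answer (20 servers per rack, 175 to 250 watts of fan power per server). The variable and function names are illustrative.

```python
SERVERS_PER_RACK = 20                   # upper end of the "18 to 20" quoted
FAN_WATTS_PER_SERVER = (175, 250)       # low and high fan power per server, as quoted


def rack_fan_power_watts(servers: int, fan_watts_per_server: float) -> float:
    """Total fan power for a rack of identical servers."""
    return servers * fan_watts_per_server


low = rack_fan_power_watts(SERVERS_PER_RACK, FAN_WATTS_PER_SERVER[0])
high = rack_fan_power_watts(SERVERS_PER_RACK, FAN_WATTS_PER_SERVER[1])
print(f"Fan power per rack: {low / 1000:.1f} kW to {high / 1000:.1f} kW")
```

The range works out to 3.5 to 5 kW per rack, which brackets the "four kilowatts" figure given in the answer.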

What we have seen, based on some of the testing and the calculations that have been done on a 2U rack server with the latest greatest CPUs we are seeing from Intel and AMD, we can bring almost 60% [...] improvement by adopting one of the liquid cooling techniques. What do I mean by that? That means I can probably reduce 100 to 220 watts of fan power by enabling liquid cooling to probably five to 10 watts max, to do the pumps and whatnot. Liquid cooling also requires some power, because as I mentioned, either I have to have a cooling distribution unit, which has a huge radiator and condenser. They all need motors to run, and then there is electronics. But you can see the reduction in power, 60% is a big number.

There are customers now asking in order to reach their sustainability targets, can I deploy liquid cooling? Not just to get the highest-end TDP, even in a modern TDP system, can I deploy liquid cooling and cut down the overall power consumption by the rack units? So we are looking seriously, you will see that is being enabled down the line in a few years. Not just for enabling the high-end TDP and high-end GPUs, but also help bridge or meet these targets for sustainability. It is going to happen.

MS: And that's a positive message. And if the industry manages to cut down on its [power] consumption, improve its carbon footprint, everybody wins. So thank you for this in-depth look, this has been interesting, educational, entertaining, and good luck with your son's PC. I hope it's one of those systems with fluorescent coolant, where you need to top it up, because those are just beautiful. It has been an absolute pleasure. Hopefully speak to you again, but for now, good luck with your work.
